The Agent Lifecycle: Building, Testing & Iterating for Enterprise‑Grade Reliability

By Teqfocus COE

2nd July, 2025

“AI agents are only as good as your ability to test, monitor, and improve them—consistently.”

From Dreamforce stages to C‑suite off‑sites, autonomous agents have captured imaginations. Yet the gulf between a polished demo and a production‑hardened agent can swallow budgets, brand equity, and trust. Part 9 translates vision into execution: a pragmatic, six‑stage lifecycle for architects, directors, VPs, and CXOs charged with turning AI promise into operational reality.

Why Lifecycle Discipline Decides Winners

|

Failure Mode |

Root Cause |

Down‑Stream Impact |

|

Inaccuracy |

Insufficient test coverage & weak trust layer |

Hallucinations, bad decisions, compliance exposure |

|

Fragility |

Tight coupling to data schemas & business logic |

Breakages after every release or M&A event |

|

Stagnation |

No feedback loop or versioning strategy |

Competitive decay, rising manual overrides |

Salesforce notes that Agentforce now handles 93 % of customer inquiries for marquee brands like Disney—but only after thousands of monitored iterations and guardrail refinements.

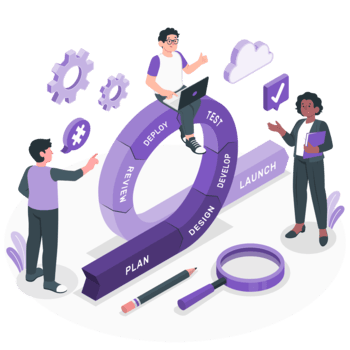

The Six Stages of a High‑Reliability Agent

1. Plan: Pin Down Purpose, Boundaries & KPIs

- Outcome first: e.g., “Reduce average handle time by 20 % in revenue‑cycle operations.”

Decide which archetype you’re building before a single prompt is written.

- Scope: 7–10 tightly defined topics or workflows per agent avoids sprawl.

- Metric design: Hallucination ≤ 1 %, success‑path ≥ 85 %, escalation ≤ 10 %.

- Risk lenses: privacy class of data, regulatory flags, brand‑tone constraints.

Don’t build what you can’t measure.

2. Build: Compose Skills, Prompts & Tools (Modular‑First)

- Agentforce’s low‑code Agent Builder lets solution engineers string together reusable skills (search KB, query CRM, trigger RPA) and custom Apex actions.

- Model‑agnostic scaffolding: As Bret Taylor recently shared in a leading podcast conversation, he warns that tying UX to a single foundation model guarantees expensive re‑platforming. Encapsulate prompts and skills behind an abstraction layer so you can swap GPT‑4o for Llama‑4 or Claude‑Haiku without touching customer flows.

- Prompt plans, not monoliths: Design declarative reasoning steps—easier to debug and audit.

- Reusable libraries: Version skills & intents in a shared repo; downstream agents inherit upgrades automatically.

3. Test: Simulate, Stress‑Test & Explain Reasoning

Before launch, rigorously test for:

- Accuracy & completeness of responses

- Self‑reflection chains that critique their own output—a leading vendor uses this technique to catch hallucinations pre‑production.

- Reason‑trace visibility (“Plan Tracer”) to show decision paths.

- Edge‑case fuzzing and red‑team jailbreak attempts.

4. Deploy: Controlled Roll‑Out, Not Big‑Bang

- Start with a Gold User Group (e.g., 25 senior support analysts).

- Limit channels—internal chat or a sandbox web widget first.

- Feature‑flag new topics for dark launches.

Launch is an open beta, not a finish line.

5. Monitor: Make Drift & Failure Visible

Dashboards should track:

- Task success vs. human baseline

- Escalation & override frequency

- Hallucination heatmap (topic × confidence)

- Latency SLOs (P95, P99)

- Version adoption curves

Continuous Trust Work (CTW): Budget ≈20 % of every sprint for prompt tuning, skill refactors, policy updates, and retraining. Top performers treat CTW like security patching—non‑negotiable maintenance.

6. Improve: Version, Rollback & Evolve

Treat agents as products: backlog, sprints, CI/CD.

- Blue‑green & canary deploys with auto‑rollback on regression.

- Safe‑code modules: Borrow from Bret Taylor’s Rust‑inspired vision (from the same podcast)—skills use type‑checked contracts and formal verification where possible.

- Guardrail drift tests whenever compliance rules change.

Top‑performing agents are never “done.” They’re maintained like products.

Governance & Risk Mitigation Essentials

Enterprise‑grade agents must include:

- Version lineage & immutable history

- Audit trails for every decision & tool call

- Role‑based access, least privilege

- Deterministic fallback / escalation protocols

- Brand‑safety guardrails: Learn from the Air Canada hallucination case—if the agent speaks, the company is liable.

Building AI agents isn’t just automation; it’s intelligent software development.

Executive Takeaways

- Lifecycle Makes or Breaks ROI – 70 % of agent issues surface post‑launch due to missing test coverage or monitoring blind spots.

- Explainability Accelerates Trust – Transparent reasoning shortens InfoSec cycles and board approvals.

- Versioning Is Non‑Negotiable – Rollback paths are a regulatory expectation, not a luxury.

- Supply‑Chain Reality Check – Data, compute, algorithms are external constraints; bake them into lifecycle planning.

- Continuous Trust Work – Budget it explicitly; treat it like patch management for AI.

- Start with a Lighthouse Workflow – Validate the lifecycle before scaling.

Ready to Operationalise Agents That Endure?

Teqfocus partners with enterprises to plan, build, test, and iterate agents that hold up under real‑world scale, complexity, and scrutiny.